Nanotechnology Gets Real

Ben Goertzel

March 1, 2002

One of the most amazing revelations that the scientific world-view has brought us is the number of different scales in the universe. Pre-civilized cultures had the concept of a scale bigger than the everyday – associated with the “heavens,” and usually with gods of some sort. The ancient Greeks conceived of a smaller “atomic” scale, and other ancient cultures like the Indians had similar ideas; but these were not fleshed out into detailed pictures of the microworld, because they were pure conceptual speculations, ungrounded in empirical observation. When Leeuwenhoek first looked through his microscope, he discovered a whole other world down there, with a rich dynamic complexity no one had imagined in any detail. Galileo and the other pioneers of astronomy had the same experience with the telescope. Yes, everyone had known the stars were up there: but so many of them! … so much variety! And the planets – the moon -- the shapes and markings on them -- !

Now we take for granted that the world we daily see and act in is just a very small scale slice taken out of all the scales of the cosmos. There are vast complex dynamic processes happening on the galactic scale, in which our sun and planet are just tiny insignificant pieces. And there are bafflingly complex things going on in our bodies – antibodies collaborating with each other to fight germs, enzymatic networks carrying out subtle computations … quantum wave functions interfering mysteriously to produce protein dynamics that interpret obscure DNA code sequences….

Until recently our participation in non-human-scale processes has been basically limited to observation and experimentation. We look at the planets; we don’t create new planets, or even move existing ones around. We do create new molecules, but it’s a hellishly difficult process. We probe particles and their interactions in our particle accelerators, but to measure or understand anything at this tiny scale we need very carefully controlled conditions.

But this is going to change. In time, technology will allow us to act as well as observe on the full range of scales. And it seems likely that we’re going to master the realm of the very small well before the realm of the very large. Manufacturing has been pushing in this direction for decades now: machines just keep getting smaller and smaller and smaller. The circuitry controlling the computer I’m typing these words on is far too small for me to see with the naked eye.

The next step along this path, the purposeful construction of molecular-level machinery, has been christened nanotechnology. The most ambitious goals of nanotechnology still live in the future: the construction of molecular assemblers, machines that are able to move molecules into arbitrary configurations, playing with matter like a child plays with Play-doh and Legos. But there are concrete scientific research programs moving straight in this direction, most ambitiously at the Zyvex Corporation, which has hired an impressive staff of nanotechnology research pioneers. And there are more specialized sorts of nanomachines in production right now: from teeny tiny transistors to DNA computers to human-engineered proteins the likes of which have never been seen in nature. Exactly how many years it will be until we can cure diseases by releasing swarms of millions of germ-killing nanobots into the bloodstream is hard to say. But the door to the nanorealm has been opened -- we’ve stepped through the doorway -- and bit by bit, year by year, we’re learning our way around inside….

How Small is Nano?

It’s hard for the human mind to grasp the tiny scales involved here. One way to conceptualize these scales is to look at the metric system’s collection of prefixes for dealing with very small quantities. We all learned about centi and micro in school. Computer technology has taught us about the scales beyond kilo: mega, giga, tera and soon peta. Over the next few decades we’ll be developing more and more commonsense collective intuition for nano, pico, femto and perhaps even the scales below.

| Prefix | Abbreviation | Meaning | Example | A rough sense of scale |
|---|---|---|---|---|
| micro | µ | 10⁻⁶ | microliter, 10⁶ µL = 1 L | 1 µL ~ a very tiny drop of water |
| nano | n | 10⁻⁹ | nanometer, 10⁹ nm = 1 m | radius of a chlorine atom in Cl₂ ~ 0.1 nm or 100 pm |
| pico | p | 10⁻¹² | picogram, 10¹² pg = 1 g | mass of a bacterial cell ~ 1 pg |
| femto | f | 10⁻¹⁵ | femtometer, 10¹⁵ fm = 1 m | radius of a proton ~ 1 fm |
| atto | a | 10⁻¹⁸ | attosecond, 10¹⁸ as = 1 s | time for light to cross an atom ~ 1 as |
| zepto | z | 10⁻²¹ | zeptomole, 10²¹ zmol = 1 mol | 1 zmol ~ 600 atoms or molecules |
| yocto | y | 10⁻²⁴ | yoctogram, 10²⁴ yg = 1 g | 1.7 yg ~ mass of a proton or neutron |
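A few of the “rough sense of scale” entries in the prefix table can be checked with simple arithmetic. The Python sketch below is illustrative only: the physical constants are standard SI/CODATA values, while the 0.3 nm atom width is an assumed round figure, not something stated in the table.

```python
# SI prefixes from the table, as powers of ten.
PREFIXES = {"micro": -6, "nano": -9, "pico": -12, "femto": -15,
            "atto": -18, "zepto": -21, "yocto": -24}

AVOGADRO = 6.02214076e23         # particles per mole (exact SI value)
PROTON_MASS_G = 1.67262192e-24   # grams (CODATA value, rounded)
SPEED_OF_LIGHT = 2.99792458e8    # metres per second (exact SI value)
ATOM_WIDTH_M = 0.3e-9            # assumed width of an atom, for illustration only


def in_prefix_units(value: float, prefix: str) -> float:
    """Convert a value in base SI units into units carrying the given prefix."""
    return value / 10.0 ** PREFIXES[prefix]


molecules_per_zmol = AVOGADRO * 10.0 ** PREFIXES["zepto"]
proton_mass_yg = in_prefix_units(PROTON_MASS_G, "yocto")
crossing_time_as = in_prefix_units(ATOM_WIDTH_M / SPEED_OF_LIGHT, "atto")

print(f"1 zmol is about {molecules_per_zmol:.0f} molecules")            # 602
print(f"a proton weighs about {proton_mass_yg:.2f} yg")                  # 1.67
print(f"light crosses a 0.3 nm atom in about {crossing_time_as:.1f} as")  # 1.0
```

The same two-line pattern (multiply or divide by a power of ten) handles any row of the table.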

Technology at the scale of a micrometer, a millionth of a meter, isn’t even revolutionary anymore. Micromachines, machines the size of a minuscule water droplet, are relatively easy to build using current engineering technology. The nanometer scale, a billionth of a meter, is where today’s most pioneering tiny-scale research aims.

Nano is atomic/molecular scale. Think about this: Everything we see around us is just a configuration of molecules. But out of the total number of possible molecular configurations, existing matter represents an incredibly small percentage – basically zero percent. Nearly all possible types of matter are as yet unknown to us. If we create machines that can piece molecules together in arbitrary ways, total control over matter is ours. This is the power and the wonder of nanotechnology.
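The “basically zero percent” claim can be made concrete with a single family of molecules. As an illustrative assumption, take protein chains 100 amino acids long built from the 20 standard amino acids, and compare the number of possible sequences with the commonly cited order-of-magnitude estimate of about 10^80 atoms in the observable universe. This is a rough sketch, not a count of all possible matter.

```python
import math

AMINO_ACIDS = 20               # standard amino acids
CHAIN_LENGTH = 100             # assumed length of a fairly small protein
ATOMS_IN_UNIVERSE_LOG10 = 80   # common order-of-magnitude estimate (~10^80 atoms)

# Every position in the chain can hold any of the 20 amino acids,
# so the number of distinct sequences is 20 ** 100.
exact_count = AMINO_ACIDS ** CHAIN_LENGTH          # Python ints are arbitrary precision
count_log10 = CHAIN_LENGTH * math.log10(AMINO_ACIDS)

print(f"distinct 100-residue sequences: about 10^{count_log10:.1f}")
print(f"that number has {len(str(exact_count))} digits")
print(f"sequences per atom in the universe: about 10^{count_log10 - ATOMS_IN_UNIVERSE_LOG10:.1f}")
```

Even if every atom in the universe were used to build one such protein, only a vanishing fraction of this single family of molecules could ever exist at once.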

As the above scale chart shows, it’s quite possible to get smaller than nanotechnology. A proton is about one femtometer across. Femtotechnology, the arbitrary reconfiguration of particles, may hold even greater rewards one day. The construction of novel forms of matter via the assemblage of known molecules may one day be viewed as limiting. But femtotechnology today is where nanotechnology was 50 years ago – abstract though enticing; a pipe dream for the moment. One may even nurse dreams beyond femtotechnology – for the sci-fi-fueled imagination, it’s not so hard to imagine dissecting quarks and intermediate vector bosons, perhaps reconfiguring being and time themselves.

But what’s fascinating about nanotechnology right now is that, more and more rapidly each year, it’s exiting the domain of wild dreams and visions -- and becoming fantastically real.

Plenty of Room at the Bottom

The amazing thing, from a 2002 perspective, is how ridiculously long it took the mainstream of science and industry to understand that the future lies in smaller, smaller, smaller. Physicist Richard Feynman laid out the point very clearly in a 1959 lecture, “There’s Plenty of Room at the Bottom”. Today the lecture is a legend; at the time it was pretty much ignored. The whole text of the talk is online at http://www.zyvex.com/nanotech/feynman.html, and it makes for pretty fascinating reading.

Feynman laid out his vision clearly and simply:

> They tell me about electric motors that are the size of the nail on your small finger. And there is a device on the market, they tell me, by which you can write the Lord's Prayer on the head of a pin. But that's nothing; that's the most primitive, halting step…. It is a staggeringly small world that is below. In the year 2000, when they look back at this age, they will wonder why it was not until the year 1960 that anybody began seriously to move in this direction.
>
> Why cannot we write the entire 24 volumes of the Encyclopedia Brittanica on the head of a pin?

Though 1959 was before the genetic code had been cracked, molecular biology was already a growing field, and many physicists were looking to it for one sort of inspiration or another. Quantum pioneer Schrödinger wrote a lovely, speculative little book, What Is Life?, describing the hereditary material as an “aperiodic crystal,” which motivated many physicists to give biology a careful look. And Feynman had the open-mindedness to look at the newly charted world of proteins and enzymes, and view it not merely as biology but as molecular machinery:

> This fact---that enormous amounts of information can be carried in an exceedingly small space---is, of course, well known to the biologists, and resolves the mystery which existed before we understood all this clearly, of how it could be that, in the tiniest cell, all of the information for the organization of a complex creature such as ourselves can be stored. All this information---whether we have brown eyes, or whether we think at all, or that in the embryo the jawbone should first develop with a little hole in the side so that later a nerve can grow through it---all this information is contained in a very tiny fraction of the cell in the form of long-chain DNA molecules in which approximately 50 atoms are used for one bit of information about the cell.

Well before PCs and the like existed, Feynman proposed molecular computers. “The wires should be 10 or 100 atoms in diameter, and the circuits should be a few thousand angstroms across….” This, he foresaw, would easily enable AI of a sort: computers that could “make judgments” and meaningfully learn from experience.

Feynman didn’t give engineering details, but he did give an overall conceptual approach to nanoscale engineering, one that is still in play today. Long before nanotechnology, Jonathan Swift wrote:

So, naturalists observe, a flea

Hath smaller fleas that on him prey;

And these have smaller fleas to bite 'em,

And so proceed ad infinitum

More recently, Dr. Seuss, in the classic children’s book The Cat in the Hat Comes Back, told the tale of the Cat in the Hat, in whose hat resides yet a smaller cat, in whose hat resides yet a smaller cat… and so on for 26 layers until the final, invisibly small cat contains a mystery substance called Voom! Feynman’s proposal was similar. Build a small machine, whose purpose is to build a smaller machine, whose purpose is to build a smaller machine, whose purpose is to build a smaller machine, and so on. The Voom! happens when one reaches the molecular level. Dr. Seuss’s Voom! merely cleaned up all the red gunk that the Cat in the Hat and his little cat friends had smeared all across the snow in the yard of their young human friends. Feynman’s Voom!, on the other hand, promises the ability to reconfigure the molecular structure of the universe, or at least a small portion of it.

Today this kind of nanofuturist rhetoric is commonplace. In 1959 it was the kind of eccentricity that could be tolerated only in a scientist whose greatness was established for more conventional achievements. The technology wasn’t there to make nanotechnology real back then; but a little more attention to the idea would surely have gotten us more rapidly to where we are today.

Eric Drexler’s Vision

Eric Drexler

http://www.homestead.com/nanotech/drexler.html

After Feynman, the next major nanovisionary to come along was Eric Drexler. His fascination with the nanotech idea grew as he did his interdisciplinary undergraduate and graduate work at MIT during the 1970s and 1980s. Drexler popularized the term “nanotechnology,” and he presented the basic concepts of molecular manufacturing in a scientific paper published in the Proceedings of the National Academy of Sciences in 1981. His 1986 book Engines of Creation introduced the notion of nanotechnology to the scientific world and the general public, giving due credit to Feynman and other earlier conceptual and practical pioneers. His 1992 work Nanosystems went into far more detail, presenting for a scientific audience a dizzying array of detailed designs for nanoscale systems: motors, computers, and more and more and more. Most critically, he proposed designs for molecular assemblers: machines that could build molecular structures to order, and thereby create new forms of matter.

Drexler’s design work as presented in Nanosystems was a kind of science fiction for the professional scientist. He described what plausibly could be built in the future, consistent with physical law, given various reasonable assumptions about future engineering technology. Whether things will ever be built exactly according to Drexler’s designs is doubtful, and how closely future technology will approach his visions is unknown. But the conceptual and inspirational power of his work is undeniable.

A taste of his thinking is conveyed in the following chart, which shows how Drexler maps conventional manufacturing technologies into molecular structures and dynamics. The analogies given in this chart were, of course, just the starting-point for his vast exploration into the domain of speculative nanosystem design. This is high-level, big-picture scientific vision-building at its best.

| Technology | Function | Molecular example(s) |
|---|---|---|
| Struts, beams, casings | Transmit force, hold positions | Microtubules, cellulose, mineral structures |
| Cables | Transmit tension | Collagen |
| Fasteners, glue | Connect parts | Intermolecular forces |
| Solenoids, actuators | Move things | Conformation-changing proteins, actin/myosin |
| Motors | Turn shafts | Flagellar motor |
| Drive shafts | Transmit torque | Bacterial flagella |
| Bearings | Support moving parts | Sigma bonds |
| Containers | Hold fluids | Vesicles |
| Pipes | Carry fluids | Various tubular structures |
| Pumps | Move fluids | Flagella, membrane proteins |
| Conveyor belts | Move components | RNA moved by fixed ribosome (partial analog) |
| Clamps | Hold workpieces | Enzymatic binding sites |
| Tools | Modify workpieces | Metallic complexes, functional groups |
| Production lines | Construct devices | Enzyme systems, ribosomes |
| Numerical control systems | Store and read programs | Genetic system |

Drexler’s Analogies Between Mechanical and Molecular Devices

In the years since his initial publications, Drexler has made a name for himself as a general-purpose futurist, and a thinker on the social and ethical implications of nanotechnology. In fact, he says, ethical concerns delayed the publication of his initial ideas by several years. "Some of the consequences of the potential abuse of this technology frankly scared the hell out of me. I wasn't sure I wanted to talk about it publicly. After a while, though, I realized that the technology was headed in a certain direction whether people were paying attention to the long-term consequences or not. It then made sense to publish and to become more active in developing these ideas further." He co-founded the Foresight Institute, which deals with social issues related to the future of nanotechnology and in recent years has broadened its scope to future technology issues in general.

Along with his move into a role as a general-purpose futurist, Drexler has consistently been helpful to others in the general techno-futurist community. For instance, when the Alcor Life Extension Foundation, a California cryonics lab, got into legal trouble for allegedly removing and freezing a client's head before she was declared legally dead, Drexler came to their rescue. He supplied a deposition in their defense, arguing that in the future nanotechnology quite plausibly would allow a person’s mind to be reconstructed from their frozen head. His position at Stanford at the time gave him a little more clout with the court system than the Alcor staff, who were viewed by some as crazy head-freezing eccentrics. Alcor survived the lawsuit.

As the story of nanotech is still unfolding, the real nature of Eric Drexler’s legacy isn’t yet clear. Perhaps history will view him merely as having done what Feynman tried and failed to do in his 1959 lecture: woken up the world to the engineering possibilities of the very small. This is the perspective taken by some contemporary nanoresearchers, whose pragmatic ideas have little to do in detail with Drexler’s more science-fictional Nanosystems proposals. On the other hand, it may well happen that as nanotechnology matures, more and more aspects of Drexler’s ambitious designs will become relevant to practical work. Only time will tell – and given the general acceleration of technological development, perhaps not as much time as you think.

Two Ways to Make Things from Molecules

Very broadly speaking, there are two ways to make a larger physical object out of a set of smaller physical objects. You can do it manually, step by step; or you can somehow coax the smaller objects to self-organize into the desired larger form.

More specifically, in the molecular domain, the two techniques widely discussed are self-assembly and positional assembly. Self-assembly is how biological systems do it: there is no engineer building organisms out of DNA and other biomolecules; the organism builds itself. Eric Drexler’s work, on the other hand, is largely inspired by analogies to conventional manufacturing, and tends to take a positional-assembly-oriented view.

In self-assembly, a bunch of molecules are allowed to move around randomly, jouncing in and out of various configurations. Over time, more stable configurations will tend to persist longer, and a high-level structure will self-assemble. For instance, two complementary strands of DNA, if left to drift around in an appropriate solution for long enough, will end up bumping into each other and then grasping together in a double-helix. And a protein, a one-dimensional strand of amino acids, will wiggle around for a while, and then eventually curl up into a 3D structure that is determined by its 1D amino acid sequence. There can be a lot of subtlety here; for instance, the protein-folding process tends to be nudged along by special “helper” molecules that enable a protein to more rapidly find the right configuration.
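The DNA example can be illustrated with a toy model of base pairing. This Python sketch ignores real thermodynamics, partial overlaps and strands sliding past one another; it simply counts Watson-Crick pairs (A with T, G with C) between two antiparallel strands, as a crude stand-in for the idea that better-matched, more stable configurations persist longer. The sequences are made up for illustration.

```python
# Watson-Crick base pairing: A with T, G with C.
COMPLEMENT = {"A": "T", "T": "A", "G": "C", "C": "G"}


def reverse_complement(strand: str) -> str:
    """Return the strand that would pair perfectly with `strand`, written 5' to 3'."""
    return "".join(COMPLEMENT[base] for base in reversed(strand))


def matched_pairs(a: str, b: str) -> int:
    """Count the base pairs formed when a and b line up antiparallel, end to end."""
    return sum(COMPLEMENT[x] == y for x, y in zip(a, reversed(b)))


strand = "ATGCGTAC"                    # hypothetical sequence
partner = reverse_complement(strand)   # "GTACGCAT"
mismatched = "GTACCCAT"                # partner with one base changed

# Crude proxy for stability: more pairs, more stable, longer-lived duplex.
print(partner, matched_pairs(strand, partner))        # GTACGCAT 8
print(mismatched, matched_pairs(strand, mismatched))  # GTACCCAT 7
```

In a real solution, a perfect partner like this one tends to stay bound, while poorly matched encounters fall apart and the strands drift on.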

But we don’t build a TV by throwing the parts into a soup and waiting for them to lock together in the appropriate overall configuration. Rather, we hold the partially-constructed TV in a fixed position and then attach a new part to it – and repeat this process over and over. This is positional assembly. The problem with doing this on the molecular scale is that it requires us to have the ability to: a) hold a molecule or molecular structure in place, recording its precise location and b) grab a molecule or molecular structure and move it from one precise location to another. Neither of these things is easy to do using current technology. However, approaches to both problems are under active development.
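Positional assembly can be sketched, very abstractly, as executing a build plan against a workpiece held at known coordinates. In the hypothetical Python sketch below (Python 3.9+), `Workpiece`, `attach` and the part labels “A” and “B” are invented for illustration; they are not a real assembler design or any actual chemistry. The point is that when every part goes to an exact site, the same plan always yields the same structure.

```python
from dataclasses import dataclass, field

Site = tuple[int, int, int]   # hypothetical lattice coordinates in the workspace


@dataclass
class Workpiece:
    """A partially built structure held fixed, so every part has a known site."""
    parts: dict[Site, str] = field(default_factory=dict)

    def attach(self, part: str, site: Site) -> None:
        """Place a part at an exact site; refuse if that site is already filled."""
        if site in self.parts:
            raise ValueError(f"site {site} is already occupied")
        self.parts[site] = part


def build(plan: list[tuple[str, Site]]) -> Workpiece:
    """Carry out a build plan one placement at a time, in order."""
    piece = Workpiece()
    for part, site in plan:
        piece.attach(part, site)
    return piece


# Hypothetical plan; "A" and "B" are placeholder part types, not real chemistry.
PLAN = [("A", (0, 0, 0)), ("A", (1, 0, 0)), ("B", (0, 1, 0)), ("B", (1, 1, 0))]

first, second = build(PLAN), build(PLAN)
print(first == second)  # True: the same plan always yields the same structure
```

The hard part at molecular scale is precisely what this sketch takes for granted: holding the workpiece still and delivering each part to its site.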

Drexler’s design for a molecular assembler is based on positional assembly. It is assumed that the molecules in question are in vacuum, so there are no other molecules bouncing into them and jouncing them around. It assumes that, unlike in traditional chemistry and biology, highly reactive intermediate structures can be constructed without reacting with one another willy-nilly, because they’re held in place.

There is a lot of power in self-assembly: each one of us is evidence of this! On the other hand, positional assembly can achieve a degree of precision that self-assembly lacks, at least in the biological domain. Each person is a little different, because of the flexibility of the self-assembly process, and this is good from an evolutionary perspective – without this diversity, new forms wouldn’t evolve. But it’s also good that, if we have to have nuclear missiles, each one of them is close to exactly identical: in this context, variation ensuing from biological-style self-assembly could lead to terrible consequences. Less dramatically, we probably wouldn’t want this kind of variation in our auto engines either.

Crystals, if grown under appropriate conditions, are examples of self-assembled structures that have the precision of positionally constructed machines. But they are much simpler than the biological molecules that Schrödinger called “aperiodic crystals,” let alone whole organisms, computers or motors. Whether new forms of self-assembly combining precision with complexity will emerge in the future is hard to say. One suspects that some combination of positional and self-assembly will one day become the standard. Positionally constructed components may self-assemble into larger structures; and self-assembled components may be positionally joined together in specific ways. Contemporary manufacturing technology is astoundingly diverse, and we can expect nanomanufacturing methods to be even more so.

Zyvex: Molecular Assemblers Go Commercial

Dozens of companies today are involved in nanotechnology in one way or another (see http://www.homestead.com/nanotechind/companies.html for a partial list). It’s also a serious pursuit of major research labs such as Los Alamos and Sandia. Perhaps the most ambitious nanotech business concern, however, is the Texas firm Zyvex. Zyvex makes no bones about its goal: it wants to construct a Drexler-style molecular assembler, and play a leading role in the revolution that will ensue from this. The Zyvex R&D group has outlined a program that it believes will lead to this goal. And unlike some other contemporary nanotech approaches that we’ll discuss below, Zyvex’s approach is pretty much squarely in the positional assembly camp.

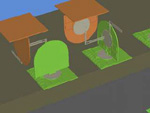

Zyvex has two distinct research groups, called Top-Down and Bottom-Up. The Bottom-Up team deals explicitly with molecule manipulation. The Top-Down team, on the other hand, works largely with micromanufacturing. They’re currently prototyping assemblers using microscopic but non-nanoscale components that can be built for relatively low cost. Using relatively straightforward contemporary technologies, one can create devices measuring tens or hundreds of microns, with individual feature sizes of about one micron.

After prototypes at the micron scale have been achieved, extension to the nanoscale will be attempted. Of course, the micron-scale machinery developed along the way to true nanotechnology may also have significant practical applications, which Zyvex may commercialize.

One approach the Zyvex Top-Down team is pursuing is called exponential assembly. Basically this means small machines building more and more small machines. If each machine builds a copy of itself, and every copy does the same, the total number of machines doubles with each round of building, so it grows exponentially. This is currently being applied specifically in the context of robot arms. Robot arm technology is by far the most mature aspect of modern robotics; factories worldwide now use robot arms to automate assembly processes. In Zyvex’s plan, the first robot arm picks up miniature parts carefully laid out for it, and assembles them into a second robot arm. The two robot arms then build two more robot arms, the resulting four build four more, and so forth.
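The growth in Zyvex’s robot-arm scheme can be sketched in a few lines of Python. This assumes an idealized process in which every arm builds exactly one new arm per round, parts are always available, and nothing fails; that matches the 1, 2, 4, 8 sequence described above, not any published Zyvex schedule.

```python
def arms_after(rounds: int, initial: int = 1) -> int:
    """Number of robot arms after `rounds`, if every arm builds one new arm per round."""
    arms = initial
    for _ in range(rounds):
        arms *= 2  # each existing arm adds one copy, so the population doubles
    return arms


def rounds_to_reach(target: int, initial: int = 1) -> int:
    """Fewest rounds of doubling needed to have at least `target` arms."""
    rounds, arms = 0, initial
    while arms < target:
        arms *= 2
        rounds += 1
    return rounds


print([arms_after(r) for r in range(6)])  # [1, 2, 4, 8, 16, 32]
print(rounds_to_reach(1_000_000))         # 20
```

Twenty rounds of doubling take a single arm past a million, which is why exponential assembly is attractive even if each individual round is slow.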

Zyvex’s Exponentially Assembling Robot Arms

www.zyvex.com

This is a first step toward Feynman’s “small machines building yet smaller machines” idea. Once exponential assembly of same-sized robot arms has been completed, the next step is having robot arms assemble slightly smaller robot arms. And so proceed ad infinitum.

On the Bottom-Up side, long-time nanotechnologist and Zyvex principal scientist Ralph Merkle has created a set of impressively detailed designs for components of molecular engines, computers and related devices. For instance, the following figure shows one of Merkle’s designs for a molecular bearing.

Ring-shaped molecules are plentiful in nature. Just create two rings, one slightly smaller than another – insert the small one in the big one – and presto, molecular bearing!

Insert one molecular ring into another, and get a molecular bearing

http://www.zyvex.com/nanotech/compNano.html

Merkle has put a fair amount of effort into figuring out how to make a molecular computer that doesn’t create too much heat. This involves the notion of reversible computing. Thermodynamics teaches us that the creation of heat is connected with the execution of irreversible operations – operations that lose information, so that after they’re completed, you can’t accurately “roll back” and figure out what the state of the world was like before the operation was carried out. Ordinary computers are based on irreversible operations; for instance, a basic OR gate outputs TRUE if either or both of its two inputs are TRUE. From a TRUE output, one can’t roll back and figure out which of the inputs was TRUE. This kind of irreversible logical operation, when implemented directly on the physical level, creates heat. Fortunately, it’s possible to implement logical operations in a fully reversible manner, at the cost of using up more memory space than is required for normal irreversible computing.
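The difference between an irreversible and a reversible gate can be checked exhaustively for small gates. This Python sketch uses the Fredkin (controlled-swap) gate, a standard reversible gate, as the swapped-in example; it is not Merkle’s molecular design. A gate is logically reversible exactly when no two inputs share an output. Computing OR with a Fredkin gate needs an extra constant input and leaves extra “garbage” outputs, which is the memory cost mentioned above.

```python
from itertools import product


def or_gate(a: int, b: int) -> tuple[int, ...]:
    """Ordinary OR: one output bit from two input bits."""
    return (a | b,)


def fredkin_gate(c: int, a: int, b: int) -> tuple[int, int, int]:
    """Fredkin (controlled-swap) gate: if c is 1, swap a and b; c passes through."""
    return (c, b, a) if c else (c, a, b)


def is_reversible(gate, n_inputs: int) -> bool:
    """Reversible means no two different inputs produce the same output."""
    outputs = [gate(*bits) for bits in product((0, 1), repeat=n_inputs)]
    return len(set(outputs)) == len(outputs)


def reversible_or(x: int, y: int) -> tuple[int, int, int]:
    """OR via a Fredkin gate: fix b = 1; output[1] is x OR y, the rest is kept as garbage."""
    return fredkin_gate(x, y, 1)


print(is_reversible(or_gate, 2))       # False: three inputs all map to (1,)
print(is_reversible(fredkin_gate, 3))  # True
for x, y in product((0, 1), repeat=2):
    print(x, y, "->", reversible_or(x, y)[1])
```

Because the Fredkin gate is its own inverse, running it a second time on its outputs recovers the original inputs, so no information is lost.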

Maverick scientist Ed Fredkin pioneered the theory of reversible computing years ago, in the context of his radical theory that the universe is a giant computer (the theory of the universe as an irreversible computer has fatal flaws, but the theory of the universe as a reversible computer is not so obviously false). Fredkin’s posited universal computer would operate below the femto scale, giving rise to quarks, leptons, protons and the whole gamut of particles as emergent patterns in its computational activity. Merkle’s proposed reversible computer components are conservative by comparison, merely involving novel configurations of molecules. The details of molecular reversible computing are not yet known, but it seems likely that they’ll involve carrying out operations at very low temperatures, and that true reversibility won’t be achieved – we don’t need zero heat, if we can come close enough.
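One way to see why low temperatures help is Landauer’s principle, a standard thermodynamic result: irreversibly erasing one bit dissipates at least kT ln 2 of heat, where k is Boltzmann’s constant and T the temperature. Reversible operations are not bound by this limit in principle. The Python sketch below evaluates the bound at room temperature and at liquid-helium temperature; the two temperatures are illustrative choices, not figures from Merkle’s designs.

```python
import math

BOLTZMANN = 1.380649e-23  # J/K (exact SI value)


def landauer_limit(temperature_k: float) -> float:
    """Minimum heat (joules) dissipated when one bit is irreversibly erased: kT ln 2."""
    return BOLTZMANN * temperature_k * math.log(2)


for label, t in [("room temperature", 300.0), ("liquid helium", 4.2)]:
    print(f"{label:>16} ({t:5.1f} K): {landauer_limit(t):.2e} J per erased bit")
```

Since the bound scales linearly with temperature, cooling from 300 K to 4.2 K lowers the minimum cost of each irreversible step by a factor of about 71.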

Tiny Transistors

Zyvex’s work in tiny-scale engineering is explicitly aimed at Drexler-ish long-term goals. But plenty of similarly fascinating work is being carried out by other firms, without such clearly stated grandiose ambitions. As a single example, consider the work of researchers at Bell Labs on tiny transistors.

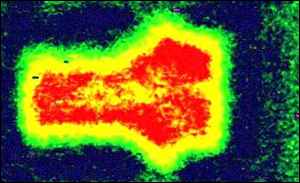

Bell Labs’ Nanoscale Transistor

http://news.bbc.co.uk/hi/english/sci/tech/newsid_528000/528109.stm

Transistors are based on semiconductors – materials like silicon and germanium that conduct electricity better than insulators but not as well as good conductors such as metals. A transistor is a small electronic device that contains a semiconductor, has at least three electrical contacts, and is used in a circuit as an amplifier, detector, or switch. Transistors are key components of nearly all modern electronic devices, and some have called them the most important invention of the 20th century. The original transistor was invented at Bell Labs in 1947 (12 years before Feynman’s original nanotech lecture). In 1999, a team of scientists at the same institution succeeded in creating a transistor 50 nanometers across, about 1/2000 the width of a human hair. All of its components are built on top of a silicon wafer.

This kind of innovation is essential to the continuation of Moore’s Law, the empirical observation that the number of transistors that fit on a chip, and with it computer performance, doubles roughly every 18 months. Moore’s Law has survived several technology revolutions already. Sometime in the next decade, conventional transistor technology will hit a brick wall, a point beyond which further incremental improvements will be harder and harder to come by. At that point, quite probably, nanotransistors – successors to today’s experimental ones – will be ready to kick in and keep the smaller, faster, better computers coming.
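The compounding behind Moore’s Law is easy to underestimate. This Python sketch computes the improvement factor over a decade for the 18-month doubling period used above, and, for comparison, for the two-year period that is also commonly quoted; it assumes smooth, uninterrupted doubling, which real technology never quite delivers.

```python
def moore_growth(years: float, doubling_months: float = 18.0) -> float:
    """Improvement factor after `years` if capability doubles every `doubling_months`."""
    return 2.0 ** (years * 12.0 / doubling_months)


print(f"18-month doubling over 10 years: x{moore_growth(10):.0f}")      # x102
print(f"24-month doubling over 10 years: x{moore_growth(10, 24):.0f}")  # x32
```

Sustaining even the slower rate means a roughly thirtyfold improvement every decade, which is why a successor to conventional transistors matters so much.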

Self-Assembly Using DNA

Positional-assembly-based nanotech is powerful in that it allows us to extend our vast knowledge of macro-scale manufacturing and engineering to the nanoworld. On the other hand, the only known examples of complex nano-level machines – biological systems – make use of the self-assembly approach. And there has been some impressive recent progress in using biological self-assembly to create systems with nonbiological purposes.

An excellent example of the self-assembly approach is the construction of stick figures out of DNA, as in the following figure of a DNA truncated octahedron, depicting a real molecular compound constructed in Ned Seeman's lab at New York University.

A Truncated Octahedron Constructed of DNA

http://seemanlab4.chem.nyu.edu/nano-oct.html

In Seeman’s lab, DNA cubes have also been constructed, along with 2D DNA-based crystals and, most ambitiously, simple DNA-based computing circuits. Computer scientists have known for a while how to make simple lattice-based devices that carry out complex computational functions, via the state of each part of the lattice affecting the states of the nearby parts of the lattice. Seeman’s group constructed a system of this nature out of a lattice of DNA.

So far there’s no advanced computation involved here: what they implemented was XOR (“exclusive or”), a simple logic function embodied in a computer by a single logic gate. An XOR gate has two inputs, and gives a TRUE output if one or the other, but not both, of its inputs is TRUE. Simple, sure -- but computer chips are constructed out of large numbers of very simple (but much bigger!) gates of this sort. A DNA solution contains a vast number of molecules, and if these can be harnessed for parallel processing, we have a lot more logic-gate-equivalents than there are neurons in the brain.
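A lattice in which each part’s state is set by its neighbors is what computer scientists call a cellular automaton. The Python sketch below is a generic illustration, not a model of the chemistry in Seeman’s lab: each cell in a new row becomes the XOR of its two neighbors in the row above (the rule known as “rule 90”), so a single TRUE cell grows into a branching pattern built entirely from XOR gates.

```python
from itertools import product


def xor(a: int, b: int) -> int:
    """Exclusive or: 1 when exactly one input is 1."""
    return a ^ b


def next_row(row: list[int]) -> list[int]:
    """Each cell becomes the XOR of its two neighbours in the previous row (edges read as 0)."""
    padded = [0] + row + [0]
    return [xor(padded[i - 1], padded[i + 1]) for i in range(1, len(padded) - 1)]


# Truth table: TRUE when one input is TRUE, but not both.
for a, b in product((0, 1), repeat=2):
    print(a, b, "->", xor(a, b))

# A lattice of XOR cells grown row by row from a single 1.
row = [0, 0, 0, 0, 1, 0, 0, 0, 0]
rows = [row]
for _ in range(3):
    row = next_row(row)
    rows.append(row)
for r in rows:
    print("".join("#" if cell else "." for cell in r))
```

Each cell does almost nothing on its own; the computation lives in how many simple cells are wired to their neighbors.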

Work is also underway creating DNA cages, in which cubic lattices of DNA are used to trap other molecules at specific positions and specific angles.

DNA Cages Containing Oriented Molecules

http://seemanlab4.chem.nyu.edu/nano-cage.html

These cages can potentially be used to assemble a complex biocomputer, with DNA structures embodying logic operations feeding data to each other along specified paths. Many noncomputational types of matter could also be synthesized this way.

Creating Novel Proteins

Arranging DNA molecules in novel configurations is exciting and may lead to such things as molecular computers and new forms of matter. But for some purposes one wants to go a little further – not accepting Nature’s biomolecules as given, but rather creating new ones from scratch.

At first glance, designing new proteins seems like an incredibly difficult problem: no one knows how to look at the sequence of amino acids defining a protein molecule’s one-dimensional structure, and predict from it the three-dimensional structure that the protein will fold up into. IBM is currently building the world’s largest supercomputer, Blue Gene, with the specific intention of taking a stab at this fabulously difficult “protein folding” problem.

But protein engineering is a rare case of something that’s actually easier than it looks, because some proteins are easier to understand than others. To engineer new proteins, you don’t need to understand all proteins, only those that you want to build. It turns out that there are many unnatural proteins whose folding is more predictable than that of proteins found in nature. Protein engineers strategically place special bonds and molecular groups in their designed proteins, so as to be able to predict how they’ll fold up.

The transcription factor NF-kB p50 bound to DNA

http://www.bioc.cam.ac.uk/UTOs/Blackburn.html

For example, Jonathan Blackburn at the University of Cambridge is working on the creation of new proteins that bind to DNA, and whose binding can be controlled by small molecules. The potential value of this is enormous. Ultimately, it could allow us to create an explicitly controllable genetic process, with the small molecules used as interactive “control switches.” In the shorter run, there are fantastic implications for genetic medicine and pharmacology in general.

Nanomedicine

The likely long-term applications of nanotechnology are far too numerous to list here. If you can configure arbitrary forms of matter, heck, what can’t you do? All current forms of manufacturing and engineering are ridiculously pathetic by comparison.

One of the more exciting medium-term applications of nanotech, however, is in the medical domain. Robert Freitas, in his 1999 book Nanomedicine, has explored this space in great detail, taking a Drexler-like approach of exploring what will likely be possible given future technologies.

Artist’s Rendition of a Respirocyte Nanobot Among Red Blood Cells

http://www.foresight.org/Nanomedicine/Gallery/Species/Respirocytes.html

For example, one of his inventions is the respirocyte, a bloodborne 1-micron-diameter spherical nanomedical device that acts as an artificial mechanical red blood cell. It would basically be a tiny vessel full of oxygen pressurized to 1000 atmospheres. Its oxygen release would be powered chemically by glucose drawn from the blood serum, and if it’s built as currently designed, it should be able to deliver 236 times more oxygen to the tissues per unit volume than natural red cells. In addition to numerous medical applications, injecting enough of these into your bloodstream would allow you to hold your breath underwater for hours.

Artist’s Rendition of a Cell Rover Nanobot

http://www.foresight.org/Nanomedicine/Gallery/Captions/Image135.html

Another envisioned medical nanobot is the “cell rover.” This one would be built by piecing together traditional and/or custom-designed biomolecules. It would zoom through the bloodstream, performing special medical tasks like removing toxins, delivering drugs, or simple cell repair. The internal frame would be made of keratin, chitin or calcium carbonate; the skin panels would be made of lipids (fats). Movement could be achieved by various biological mechanisms such as cilia or bacterial flagella, or by more original nanotech designs. A submillimeter-band single-molecule radio antenna could be supplied to allow communication with a control device and/or other cell rovers.

If the device were made to look like a native cell, the immune system would leave it alone, allowing it to happily zip about the body doing its business. The implications of a device like this for medical science would, obviously, be tremendous.


Plenty of Room at the Bottom, Indeed

Positional assembly or self-assembly, or some combination of the two? Cascades of machines, each one producing smaller machines – or subtle modifications of existing biomolecular processes? General-purpose molecular assemblers, or a host of different but overlapping nanoscale assembly processes?

The questions are many. But what’s clear from the vast amount of work going on is this: nanotechnology isn’t science fiction anymore. It is here right now. At the moment it’s mainly in the research lab, but within the next 5-10 years it will be productized, at least in its simpler incarnations. And the practical experience obtained with things like DNA lattices, micromachines and tiny transistors will help us flesh out the difficult issues involved in realizing Feynman’s and Drexler’s bigger dreams. The tendency toward miniaturization, which we see all around us in everyday technology like radios, calculators and computers, is going to be far more profound than these examples suggest. The possibilities achievable by reconfiguring matter at will are mind-boggling, but during the 21st century they may well come within our reach.