A System-Theoretic Analysis of Focused Cognition,

and its Implications for the Emergence of Self and Attention

Ben Goertzel

Novamente LLC

November 4, 2006

Abstract

1. Introduction

Human cognition is complex, involving a combination of multiple complex mechanisms with overlapping purposes. I conjecture that this complexity is not entirely the consequence of the “messiness” of evolved systems like the brain. Rather, I suggest, any system that must perform advanced cognition under severe computational resource constraints will inevitably display a significant level of complexity and multi-facetedness.

This complexity, however, proves problematic for those attempting to grapple with the mind from the perspective of science and engineering.

I believe the complexity of mind can

In (Goertzel, 2006) I follow up further on these concepts in an AGI context, showing how the systems-theoretic notions introduced here may be used to give a systematic typology of the cognitive mechanisms involved in the Novamente AGI architecture, and an explanation of why it seems plausible to hypothesize that a fully implemented Novamente system, if properly educated in the context of an embodiment and shared environment, could give rise to self and attention as emergent structures.

The first theoretical step taken here is to introduce the general notions of forward synthesis and backward synthesis, as an elaboration of the theory of component-systems and self-generating systems proposed in Chaotic Logic (Goertzel, 1994).

2. AGI and the Complexity of Cognition

The vast bulk of approaches to AI and even AGI, I feel, deal with the problem of the complexity of cognition by essentially ignoring it.

Other AGI approaches take a hybrid strategy, in which an overall architecture is posited and then various diverse algorithms and structures are proposed to fill in the various functions defined by the architecture.

To make this point, I will cite another AGI architecture of which I am very fond: Stan Franklin’s (2006) LIDA architecture. LIDA is a very well thought out architecture which is well grounded in cognitive science research, but it is not clear to me whether the combination of learning mechanisms used within LIDA is going to be appropriately chosen and tuned to give rise to the emergent structures and dynamics characteristic of general intelligence, such as the self and the moving bubble of attention. LIDA is a very general approach, which could be used as a container for a haphazard assemblage of learning techniques, or for a carefully assembled combination of learning techniques designed to lead to appropriate emergence. So, this is not a criticism of LIDA as such, but rather an argument that without concrete choices regarding the specifics of the learning algorithms, it is not possible to tell whether or not the LIDA system is going to be plausibly capable of a reasonable level of general intelligence.

The Novamente design seeks to avoid these various potential problems via the incorporation of a variety of cognitive mechanisms specifically designed for effective interoperation and for the induction of appropriate emergent behaviors and structures. I believe this approach is conceptually sound; however, it does have the drawback of leading to a rather complex design in which the accurate description and development of each component requires careful consideration of all other components. For this reason it is worthwhile to seek simplification and consolidation of cognitive mechanisms, insofar as is possible. In this paper I introduce a conceptual framework that has been developed in order to provide a simplifying, unifying perspective on the various cognitive mechanisms existing in the Novamente design, and an abstract and coherent argument regarding the dynamics by which these mechanisms may give rise to appropriate emergent structures.

The framework presented here is a further development of the system-theoretic perspective on cognition introduced in Chaotic Logic

In the last couple of paragraphs I have explained the historical origins of the ideas to be presented here: the notions of forward and backward synthesis originated as part of an effort to simplify the collection of cognitive mechanisms utilized in the Novamente system. These notions were then recognized as possessing potentially more general importance. In the remainder of the paper I will proceed in the opposite direction: presenting forward and backward synthesis as general system-theoretic (and mathematical) notions, and exploring their general implications for the philosophy of cognition. In another paper (Goertzel, 2006) these are applied to provide a systematic typology of the collection of Novamente cognitive processes.

Furthermore, the (hypothesized, not yet observed in experiments) emergence of self and attention from the overall dynamics of the Novamente system, which in prior publications has largely been discussed either in very general conceptual terms or else in terms of the specific interactions between specific system components, may now be viewed as a particular case of the general emergence of self and attention as strange attractors of forward-backward synthesis dynamics. This is often the sort of conclusion one wants to get out of systems theory. It rarely tells one specific new things about specific systems directly -- but it frequently allows one to better organize and understand specific things about specific systems, thus in some cases pointing the way to new discoveries.

3. Forward and Backward Synthesis as General Cognitive Dynamics

The notion of forward and backward synthesis presented here is an elaboration of a system-theoretic approach to cognition developed by George Kampis and the author in the early 1990s. This section presents forward and backward synthesis in this general context.

3.1. Component-Systems and Self-Generating Systems

Let us begin with the concept of a “component-system”, as described in George Kampis’s (1991) book Self-Modifying Systems in Biology and Cognitive Science, and as modified into the concept of a “self-generating system” or SGS in Chaotic Logic.

Next, in SGS theory there is also a notion of reduction (not present in the Lego metaphor): sometimes when components are combined in a certain way, a “reaction” happens, which may lead to the elimination of some of the components. One relevant metaphor here is chemistry.

Formally, suppose {C

where Join is a joining operation, and Reduce is a reduction operator. The joining operation is assumed to map tuples of components into components, and the reduction operator is assumed to map the space of components into itself. Of course, the specific nature of a component system is totally dependent on the particular definitions of the reduction and joining operators; below I will specify these operators in the context of the Novamente AGI system, but for the purpose of the general theoretical discussion in this section they may be left general.
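To make the roles of the two operators concrete, here is a minimal Python sketch of one update step of a component system. Everything specific in it is an assumption chosen for illustration only: components are strings, the hypothetical `join` concatenates them, and the hypothetical `reduce_component` lets adjacent "ab" pairs "react" and disappear, loosely echoing the chemistry metaphor. These are not the operators of SGS theory or of Novamente.

```python
from itertools import product


def join(*components: str) -> str:
    """Hypothetical joining operation: map a tuple of components to one component."""
    return "".join(components)


def reduce_component(component: str) -> str:
    """Hypothetical reduction: adjacent 'ab' pairs react and are eliminated."""
    while "ab" in component:
        component = component.replace("ab", "")
    return component


def step(pool: set[str], arity: int = 2) -> set[str]:
    """Return every component of the form Reduce(Join(C1, ..., Cr)) with r = arity."""
    return {
        reduce_component(join(*combo))
        for combo in product(sorted(pool), repeat=arity)
    }


next_pool = step({"a", "b"})
print(next_pool)
```

In a real system only a subset of these candidates would survive into the next time step; the sketch simply enumerates all of them.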

It is also important that (simple or compound) components may have various quantitative properties.

3.2. Forward and Backward Synthesis

Now we move on to the main point. The basic idea put forth in this paper is that all or nearly all focused cognitive processes are expressible using two general process-schemata called forward and backward synthesis, to be presented below. The notion of “focused cognitive process” will be exemplified more thoroughly below, but in essence what is meant is a cognitive process that begins with a small number of items (drawn from memory or perception) as its focus, and has as its goal discovering something about these items, or discovering something about something else in the context of these items or in a way strongly biased by these items. This is different from, for example, a cognitive process whose goal is more broadly based and explicitly involves all or a large percentage of the knowledge in an intelligent system’s memory store.

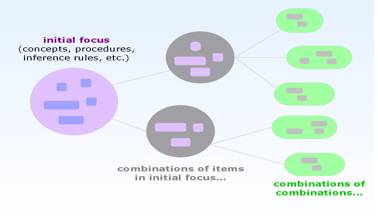

Figure 1.

The forward and backward synthesis processes as I conceive them, in the general framework of SGS theory, are as follows:

Forward synthesis:

1. Begin with some initial components (the initial “current pool”), an additional set of components identified as “combinators” (combination operators), and a goal function

2. Combine the components in the current pool, utilizing the combinators, to form product components in various ways, carrying out reductions as appropriate, and calculating relevant quantities associated with components as needed

3. Select the product components that seem most promising according to the goal function, and add these to the current pool (or else simply define these as the current pool)

4. Return to Step 2
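The forward synthesis steps above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not an implementation from the Novamente system: components are integers, combinators are binary arithmetic functions (so "reduction" is just arithmetic evaluation), the goal function scores closeness to a target number, and the open-ended "return to Step 2" loop is cut off after a fixed number of rounds. The names `forward_synthesis`, `keep`, and `rounds` are hypothetical.

```python
from itertools import product
from operator import add, mul
from typing import Callable, Iterable

Combinator = Callable[[int, int], int]


def forward_synthesis(
    initial_pool: Iterable[int],
    combinators: Iterable[Combinator],
    goal: Callable[[int], float],
    keep: int = 2,
    rounds: int = 2,
) -> set[int]:
    """Greedy forward synthesis over integer components (illustrative only)."""
    pool = set(initial_pool)          # Step 1: the initial "current pool"
    combinators = list(combinators)
    for _ in range(rounds):           # Step 4, bounded here by `rounds`
        # Step 2: combine pairs of pool members with every combinator.
        products = {
            f(a, b) for f in combinators for a, b in product(pool, repeat=2)
        }
        # Step 3: keep the most promising products and add them to the pool.
        best = sorted(products, key=goal, reverse=True)[:keep]
        pool |= set(best)
    return pool


# Toy goal: prefer components close to the number 12.
result = forward_synthesis([2, 3], [add, mul], goal=lambda c: -abs(c - 12))
print(sorted(result))
```

The sketch uses the "add to the current pool" variant of Step 3; replacing `pool |= set(best)` with `pool = set(best)` would give the variant in which the selected products become the new pool.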

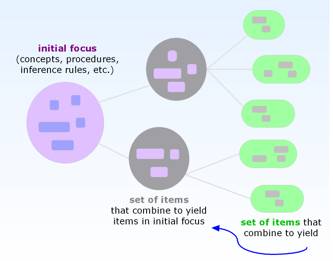

Figure 2.

Backward synthesis:

1. Begin with some components (the initial “current pool”), and a goal function

2. Seek components so that, if one combines them to form product components using the combinators and then performs appropriate reductions, one obtains (as many as possible of) the components in the current pool

3. Use the newly found constructions of the components in the current pool, to update the quantitative properties of the components in the current pool, and also (via the current pool) the quantitative properties of the components in the initial pool

4. Out of the components found in Step 2, select the ones that seem most promising according to the goal function, and add these to the current pool (or else simply define these as the current pool)

5. Return to Step 2
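A correspondingly minimal sketch of the backward synthesis steps follows. Again, the specifics are assumptions made for illustration: components are integers, the system's long-term memory is a small list, the combinators are binary arithmetic functions, and the only "quantitative property" tracked is the set of constructions found for each component. The function name `backward_synthesis` and its parameters are hypothetical.

```python
from itertools import product
from operator import add, mul
from typing import Callable, Iterable

Construction = tuple[str, int, int]  # (combinator name, left part, right part)


def backward_synthesis(
    targets: Iterable[int],
    memory: Iterable[int],
    combinators: Iterable[Callable[[int, int], int]],
    goal: Callable[[int], float],
    keep: int = 2,
    rounds: int = 1,
) -> tuple[set[int], dict[int, set[Construction]]]:
    """Search memory for parts that combine into the current pool (illustrative)."""
    pool = set(targets)                        # Step 1: the initial current pool
    memory = list(memory)
    combinators = list(combinators)
    constructions: dict[int, set[Construction]] = {}
    for _ in range(rounds):                    # Step 5, bounded by `rounds`
        found: set[int] = set()
        # Step 2: look for pairs whose combination yields a pool member.
        for f, (a, b) in product(combinators, product(memory, repeat=2)):
            result = f(a, b)
            if result in pool:
                # Step 3: record the construction as a property of the component.
                constructions.setdefault(result, set()).add((f.__name__, a, b))
                found |= {a, b}
        # Step 4: add the most promising newly found parts to the pool.
        best = sorted(found - pool, key=goal, reverse=True)[:keep]
        pool |= set(best)
    return pool, constructions


# Toy goal: prefer small (simple) components as explanations of 12.
pool, how = backward_synthesis([12], [2, 3, 4, 6], [add, mul], goal=lambda c: -c)
print(sorted(pool), sorted(how[12]))
```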

Less technically and more conceptually, one may rephrase these process descriptions as follows:

Forward synthesis: Iteratively build compounds from the initial component pool using the combinators, greedily seeking compounds that seem likely to achieve the goal

Backward synthesis: Iteratively search (the system’s long-term memory) for component-sets that combine using the combinators to form the initial component pool (or subsets thereof), greedily seeking component-sets that seem likely to achieve the goal

More formally, forward synthesis may be specified as follows. Let X denote the set of combinators, and let $Y_0$ denote the initial pool of components (the initial focus of the cognitive process).

where the $C_i$ are drawn from $Y_i$ or from X. We may then say

where Filter is a function that selects a subset of its arguments.
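For reference, the two relations described in words above can be written in display form. The following is a sketch reconstructed from the surrounding definitions (the candidate set $Z_i$, the pools $Y_i$ and $Y_{i+1}$, and the operators Join, Reduce and Filter); the join arity $n$ and the set-builder notation are assumptions.

```latex
\begin{align}
Z_i &= \{\, \mathrm{Reduce}(\mathrm{Join}(C_1, \ldots, C_n)) \;:\; C_1, \ldots, C_n \in Y_i \cup X \,\} \\
Y_{i+1} &= \mathrm{Filter}(Z_i)
\end{align}
```

Under this reading, $Z_i$ is the full set of compounds producible from the current pool and the combinators, and $Y_{i+1}$ is the subset that the filter retains.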

Backward synthesis, on the other hand, begins with a set W of components, and a set X of combinators, and tries to find a series $Y_i$ so that according to the process of forward synthesis, $Y_n = W$.

In practice, of course, the implementation of a forward synthesis process need not involve the explicit construction of the full set $Z_i$. Rather, the filtering operation takes place implicitly during the construction of $Y_{i+1}$. The result, however, is that one gets some subset of the compounds producible via joining and reduction from the set of components present in $Y_i$ plus the combinators X.

Conceptually one may view forward synthesis as a very generic sort of “growth process,” and backward synthesis as a very generic sort of “figuring out how to grow something.”


3.3. The Dynamic of Iterative Forward-Backward Synthesis

While forward and backward synthesis are both very useful on their own, they achieve their greatest power when harnessed together. It is my hypothesis that the dynamic pattern of alternating forward and backward synthesis has a fundamental role in cognition. Put simply, forward synthesis creates new mental forms by combining existing ones. Then, backward synthesis seeks simple explanations for the forms in the mind, including the newly created ones; and, this explanation itself then comprises additional new forms in the mind, to be used as fodder for the next round of forward synthesis. Or, to put it yet more simply:

It is not hard to express this alternating dynamic more formally, as well.

Let X denote any set of components.

Let F(X) denote a set of components which is the result of forward synthesis on X.

Let B(X) denote a set of components which is the result of backward synthesis of X.

Let S(t) denote a set of components at time t, representing part of a system’s knowledge base.

Let I(t) denote components resulting from the external environment at time t.

Then, we may consider a dynamical iteration of the form

This expresses the notion of alternating forward and backward synthesis formally, as a dynamical iteration on the space of sets of components. We may then speak about attractors of this iteration: fixed points, limit cycles and strange attractors. One of the key hypotheses I wish to put forward here is that some key emergent cognitive structures are strange attractors of this equation.
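The attractor language used here can be made tangible with a small numerical experiment. The sketch below assumes the iteration takes the form S(t+1) = B(F(S(t) ∪ I)), with an input set I that does not change over time; both that form and the toy operators are assumptions, not definitions taken from the text. Components are integers modulo 5, the hypothetical `forward` adds all pairwise sums, and the hypothetical `backward` is a crude stand-in for "explanation" that discards every component that can be rebuilt from a pair in the set. Because this toy state space is finite, every trajectory eventually becomes periodic, so the example can exhibit only fixed points and limit cycles, not strange attractors.

```python
from typing import FrozenSet, List, Optional, Tuple

State = FrozenSet[int]
MOD = 5  # hypothetical: components are integers modulo 5


def pair_sums(x: State) -> State:
    """All components obtainable by combining two members of x."""
    return frozenset((a + b) % MOD for a in x for b in x)


def forward(x: State) -> State:
    """Toy F: add every pairwise combination to the set."""
    return x | pair_sums(x)


def backward(x: State) -> State:
    """Toy B: keep only components not explainable as a combination of others."""
    return x - pair_sums(x)


def iterate(
    s0, external: State = frozenset(), max_steps: int = 100
) -> Tuple[List[State], Optional[int], Optional[int]]:
    """Run S(t+1) = B(F(S(t) | I)) until a state repeats.

    Returns (trajectory, transient_length, period); the last two are None
    if no state repeats within max_steps.
    """
    seen = {}
    trajectory: List[State] = []
    state = frozenset(s0)
    for t in range(max_steps):
        if state in seen:
            return trajectory, seen[state], t - seen[state]
        seen[state] = t
        trajectory.append(state)
        state = backward(forward(state | external))
    return trajectory, None, None


trajectory, transient, period = iterate({1}, external=frozenset({2}))
print([sorted(s) for s in trajectory], transient, period)
```

A period of 1 corresponds to a fixed point and a larger period to a limit cycle; detecting the repeat by state alone is valid only because the input set is held constant.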

4. Self and Focused Attention as Approximate Attractors of the Dynamic of Iterated Forward/Backward Synthesis

In The Hidden Pattern

In the previous section I have described the pattern of ongoing habitual oscillation between forward and backward synthesis as a kind of “dynamical iteration.”

4.1. Self

The “self” in the present context refers to the “phenomenal self” (Metzinger, 2004) or “self-model” (Epstein, 1978). That is, the self is the model that a system builds internally, reflecting the patterns observed in the (external and internal) world that directly pertain to the system itself. As is well known in everyday human life, self-models need not be completely accurate to be useful; and in the presence of certain psychological factors, a more accurate self-model may not necessarily be advantageous. But a self-model that is too badly inaccurate will lead to a badly-functioning system that is unable to effectively act toward the achievement of its own goals.

The value of a self-model for any intelligent system carrying out embodied agentive cognition is obvious. And beyond this, another primary use of the self is as a foundation for metaphors and analogies in various domains. Patterns recognized pertaining to the self are analogically extended to other entities. In some cases this leads to conceptual pathologies, such as the anthropomorphization of trees, rocks and other such objects that one sees in some precivilized cultures.

A self-model can in many cases form a self-fulfilling prophecy (to make an obvious double entendre!).

In what sense, then, may it be said that self is an attractor of iterated forward-backward synthesis?

My hypothesis is that after repeated iterations of this sort, in infancy, finally during early childhood a kind of self-reinforcing attractor occurs, and we have a self-model that is resilient and doesn’t change dramatically when new instances of action- or explanation-generation occur. This is not strictly a mathematical attractor, though, because over a long period of time the self may well shift significantly.

Finally, it is interesting to speculate regarding how self may differ in future AI systems as opposed to in humans.

4.2. Attentional Focus

Next, the notion of an “attentional focus” is similar to Baars’ (1988) notion of a Global Workspace: a collection of mental entities that are, at a given moment, receiving far more than the usual share of an intelligent system’s computational resources.

In the human mind, there is a self-reinforcing dynamic pertaining to the collection of entities in the attentional focus at any given point in time, resulting from the observation that if A is in the attentional focus, and A and B have often been associated in the past, then odds are increased that B will soon be in the attentional focus.
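This associative dynamic can be illustrated with a short Python sketch. It is a deliberately simplified, deterministic stand-in for what the text describes probabilistically: each item currently in focus passes its association strength to its associates and receives a fixed persistence bonus, and the highest-scoring items form the next focus. The function name `update_focus`, the association strengths, the bonus of 1.0, and the `capacity` limit are all hypothetical.

```python
from typing import Dict, Set


def update_focus(
    focus: Set[str],
    association: Dict[str, Dict[str, float]],
    capacity: int = 2,
) -> Set[str]:
    """Return the next attentional focus given past association strengths."""
    scores: Dict[str, float] = {}
    for a in focus:
        # Hypothetical persistence bonus: items in focus tend to stay in focus.
        scores[a] = scores.get(a, 0.0) + 1.0
        # Items often associated with A become more likely to enter the focus.
        for b, strength in association.get(a, {}).items():
            scores[b] = scores.get(b, 0.0) + strength
    # Highest score first; ties are broken alphabetically for determinism.
    ranked = sorted(scores, key=lambda item: (-scores[item], item))
    return set(ranked[:capacity])


association = {
    "coffee": {"cup": 0.9, "morning": 0.6},
    "cup": {"coffee": 0.9, "tea": 0.4},
}
first = update_focus({"coffee"}, association)
second = update_focus(first, association)
print(first, second)
```

Once two strongly associated items are both in focus they reinforce each other, so repeated updates leave the focus unchanged, which is the self-reinforcing pattern described above in miniature.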

The forward and backward synthesis perspective provides a more systematic perspective on this self-reinforcing dynamic. Forward synthesis occurs in the attentional focus when two or more items in the focus are combined to form new items, new relationships, new ideas.

The backward synthesis stage may result in items being pushed out of the attentional focus, to be replaced by others. The same is true of the forward synthesis stage: the combinations may overshadow and then replace the things combined. However, in human minds and functional AI minds, the attentional focus will not be a complete chaos with constant turnover: sometimes the same set of ideas -- or a shifting set of ideas within the same overall family of ideas -- will remain in focus for a while. When this occurs it is because this set or family of ideas forms an approximate attractor for the dynamics of the attentional focus, in particular for the forward/backward synthesis dynamic of speculative combination and integrative explanation. Often, for instance, a small “core set” of ideas will remain in the attentional focus for a while, but will not exhaust the attentional focus: the rest of the attentional focus will then, at any point in time, be occupied with other ideas related to the ones in the core set. Often this may mean that, for a while, the whole of the attentional focus will move around quasi-randomly through a “strange attractor” consisting of the set of ideas related to those in the core set.

5. Conclusion

The ideas presented above (the notions of forward and backward synthesis, and the hypothesis of self and attentional focus as attractors of the iterative forward-backward synthesis dynamic) are quite generic and are hypothetically proposed to be applicable to any cognitive system, natural or artificial. In another paper (Goertzel, 2006), I get more specific and discuss the manifestation of the above ideas in the context of the Novamente AGI architecture. I have found that the forward/backward synthesis approach is a valuable tool for conceptualizing Novamente’s cognitive dynamics. And, I conjecture that a similar utility may be found more generally.

Next, so as not to end on too blasé a note, I will also make a stronger hypothesis.

- manifest the dynamic of iterated forward and backward synthesis

- do so in such a way as to lead to self and attentional focus as emergent structures that serve as approximate attractors of this dynamic, over time periods that are long relative to the basic “cognitive cycle time” of the system’s forward/backward synthesis dynamics

To prove the truth of a hypothesis of this nature would seem to require mathematics fairly far beyond anything that currently exists. Nonetheless, I feel it is important to formulate and discuss such hypotheses, so as to point the way for future investigations both theoretical and pragmatic.

References

- Baars, Bernard J. (1988). A Cognitive Theory of Consciousness

- Boden, Margaret (1994). The Creative Mind. Routledge

- Epstein, Seymour (1980). The Self-Concept: A Review and the Proposal of an Integrated Theory of Personality, p. 27-39 in Personality: Basic Issues and Current Research, Englewood Cliffs: Prentice-Hall

- Goertzel, Ben (2006). Virtual Easter Egg Hunting: A Thought-Experiment in Embodied Social Learning, Cognitive Process Integration, and the Dynamic Emergence of the Self, Proceedings of 2006 AGI Workshop, Bethesda MD, IOS Press

- Goertzel, Ben (1993). The Evolving Mind. Gordon and Breach

- Goertzel, Ben (1993). The Structure of Intelligence. Springer-Verlag

- Goertzel, Ben (1997). From Complexity to Creativity. Plenum Press

- Goertzel, Ben (1997). Chaotic Logic.

- Goertzel, Ben (2002). Creating Internet Intelligence. Plenum Press

- Goertzel, Ben and Cassio Pennachin, Editors (2006).

- Goertzel, Ben and Stephan Vladimir Bugaj (2006).

- Goertzel, Ben (2006). The Hidden Pattern: A Patternist Philosophy of Mind,

- Hebb, Donald (1949). The Organization of Behavior. Wiley

- Kampis, George (1991). Component-Systems in Biology and Cognitive Science. Plenum Press

- Lakoff, George and Rafael Nunez (2002). Where Mathematics Comes From

- Lenat, D. and R. V. Guha. (1990). Building Large Knowledge-Based Systems: Representation and Inference in the Cyc Project

- Metzinger, Thomas (2004). Being No One.

- Ramamurthy, U., Baars, B., D'Mello, S. K., & Franklin, S. (2006). LIDA: A Working Model of Cognition. Proceedings of the 7th International Conference on Cognitive Modeling. Eds: Danilo Fum, Fabio Del Missier and Andrea Stocco; pp 244-249. Edizioni Goliardiche, Trieste, Italy.

- Sutton, Richard and Andrew Barto (1998). Reinforcement Learning. MIT Press.

- Wang, Pei (2006). Rigid Flexibility: The Logic of Intelligence. Springer-Verlag.